Green Cotton

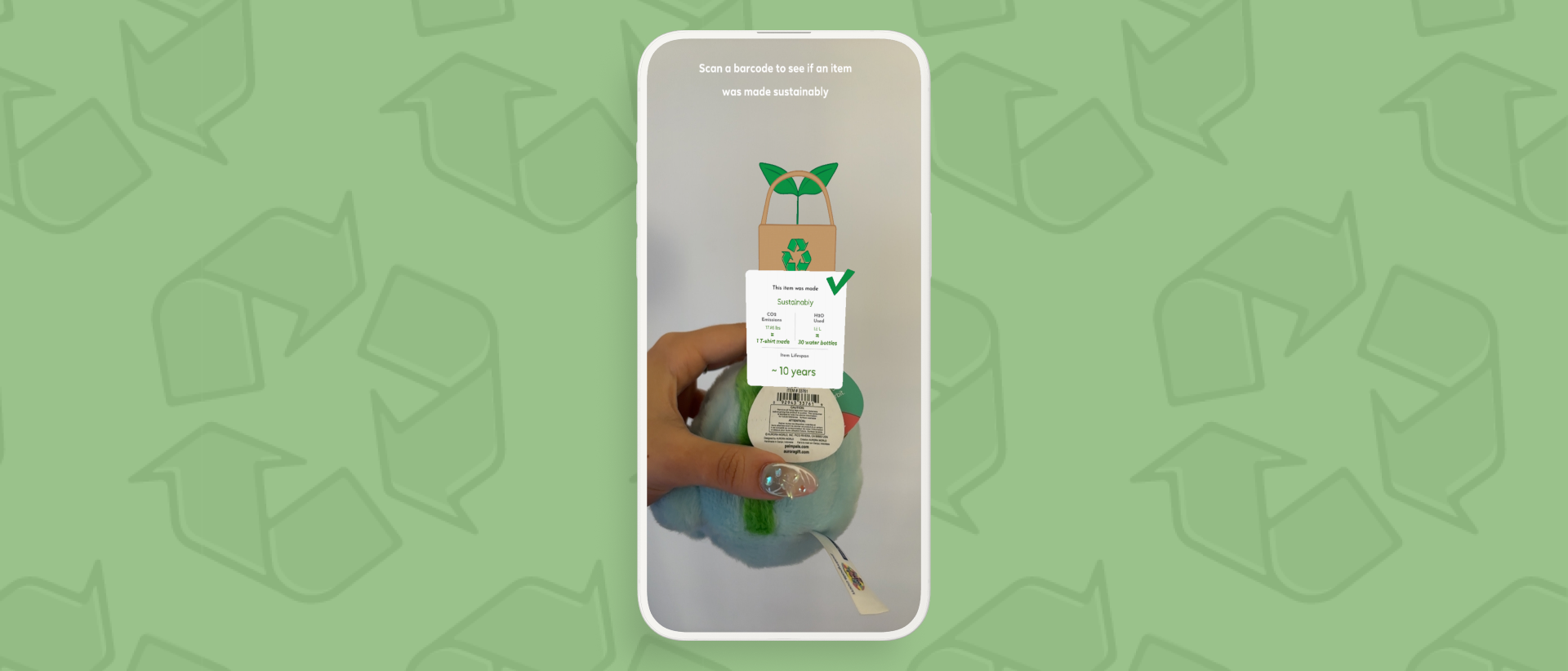

An AR mobile product that efficiently helps users make informed eco-friendly shopping choices.


In the face of climate change, consumers struggle to find affordable and sustainable options. The NYC Fair Trade Coalition addresses this by offering thrifted clothing at affordable prices and facilitating clothing exchanges.

However, discovering sustainable stores is challenging, as they are often not well-advertised. Mainstream brands lack transparency, leaving shoppers uncertain about their purchases' sustainability. There is also a knowledge gap in understanding sustainability in fashion, making informed choices difficult for consumers.

If the NYC Fair Trade Coalition and other sustainable small businesses are doing their part, then what’s the problem?

Accessibility, convenience, and transparency.

Which begs the question:

How Might We...

Some challenges I expected to encounter with this project were:

I had to understand what in the fashion industry is harming the environment, as well as the target users' pain points and frustrations with their current sustainable shopping experiences.

I found that fast fashion, driven by social media trends, significantly contributes to global warming due to carbon emissions and excessive water usage in mass production. Additionally, the mass production of clothing leads to less durable garments, decreasing their lifespan and contributing to increased waste.

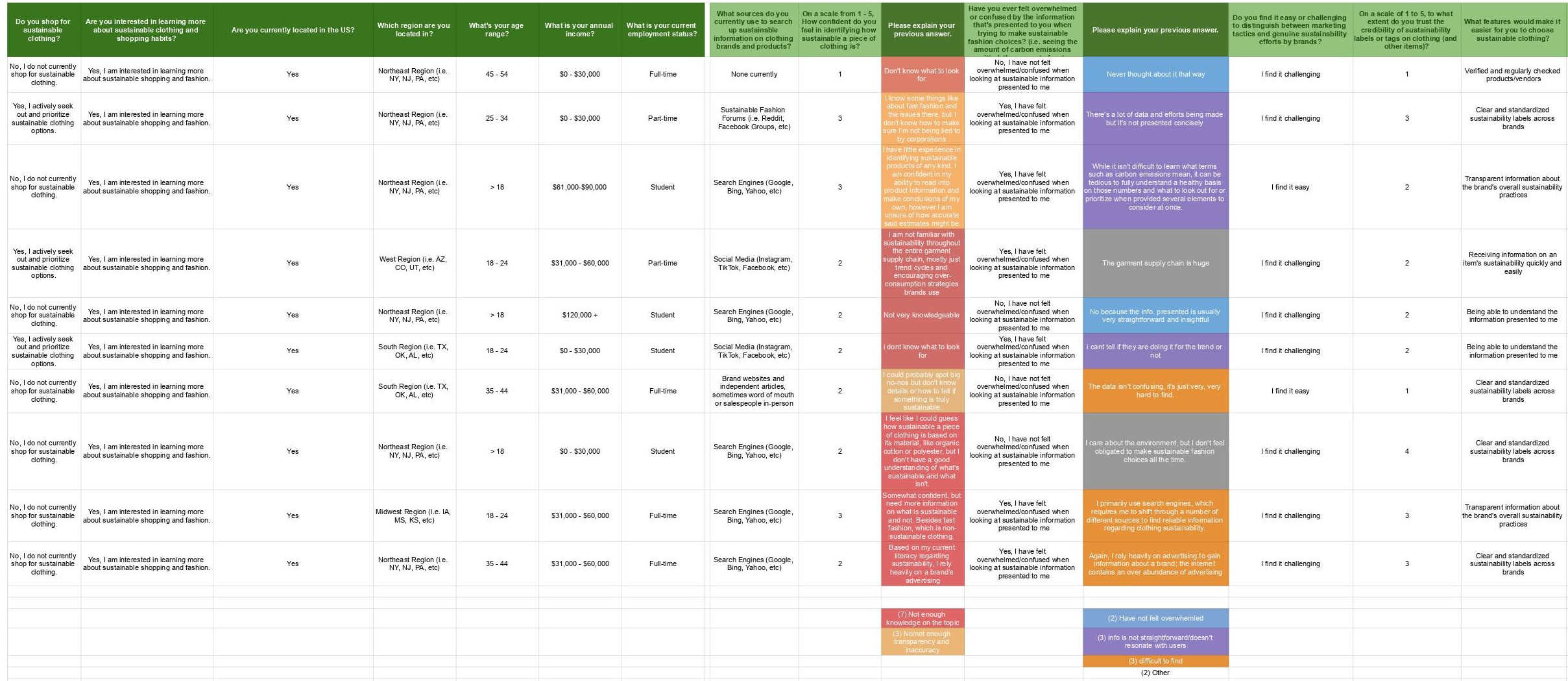

(n-count = 11)

To gather insights, I conducted a >5 minute survey targeting U.S. residents via Google Forms, distributed on platforms like Reddit and Discord. The aim was to grasp the sustainable shopping experience for both seasoned eco-shoppers and newcomers, identifying pain points and successful strategies.

Here's a quick overview of all the survey questions and responses:

And here are the key findings:

Most respondents are not confident in identifying if a piece of clothing is made sustainably due to a lack of knowledge on where to find manufacturing information online and what to look out for.

“Somewhat confident, but need more information on what is sustainable and not. Besides fast fashion, which is non-sustainable clothing. ” (18-24, full-time employment, Midwest Region)

Many feel extremely overwhelmed by the sustainable information they do find, as it is often presented as numbers and statistics that don’t resonate with them.

“While it isn't difficult to learn what terms such as carbon emissions mean, it can be tedious to fully understand a healthy basis on those numbers and what to look out for or prioritize when provided several elements to consider at once.” (> 18, student, Northeast Region)

Most respondents do not trust the sustainability labels on clothing items.

“I cant tell if they [companies] are doing it for the trend or not” (18-24, student, South Region)

With my new findings, I decided to keep the following in mind while thinking of what the mobile product should include:

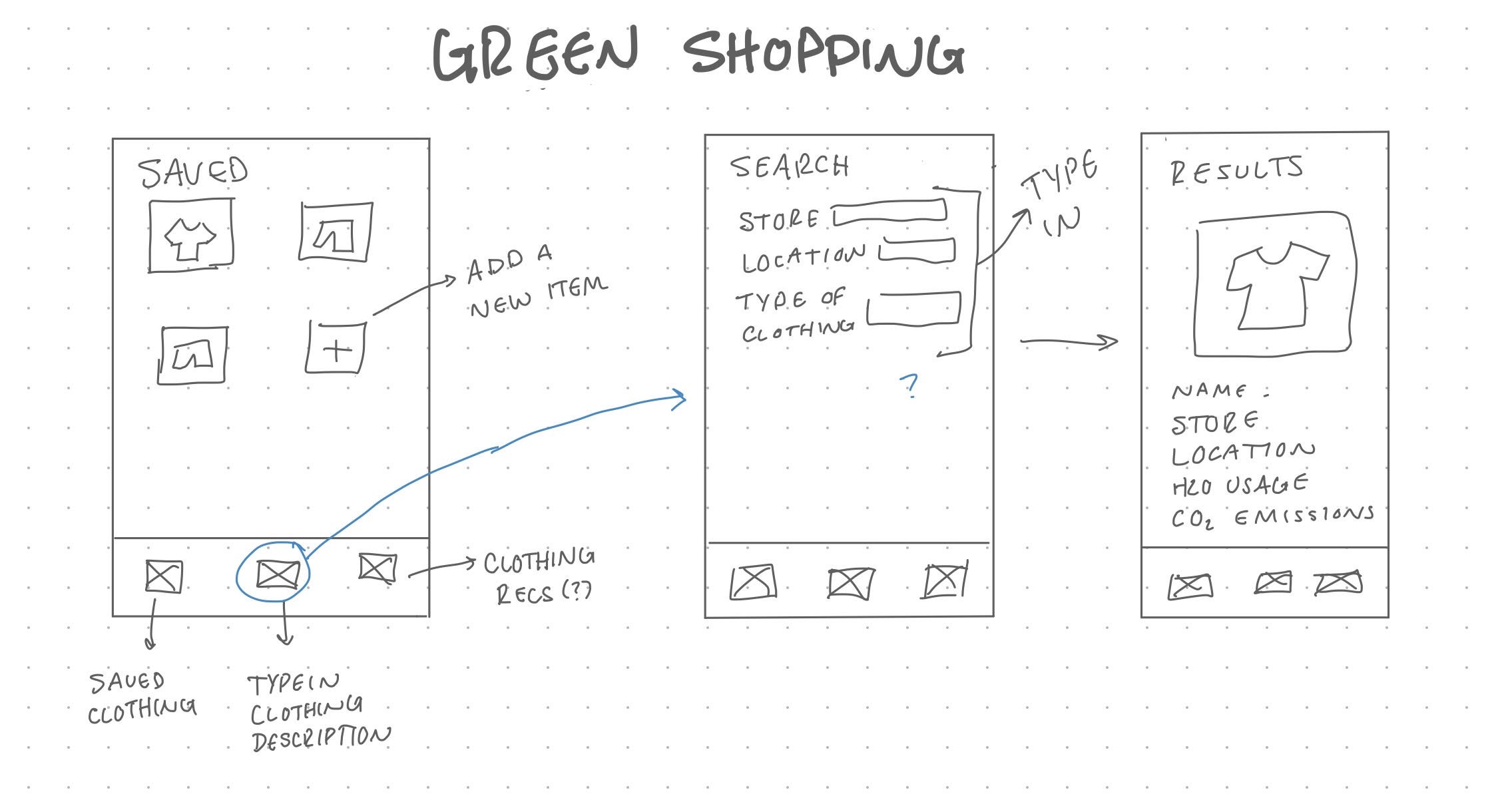

And with that I got to sketching:

While sketching, I quickly realized that the app's typing input function wouldn't be practical for users browsing clothes in-store. Typing in details for every item would be inefficient and could discourage users.

I almost shelved the idea until one day at a cafe, I saw how easy and fast it was to pull up a menu by scanning a QR code. This inspired me to explore QR codes further, and I discovered they could link to a wide range of information. This led me back to the drawing board (literally):

While researching the technicalities of QR codes, I also stumbled across the world of AR and considered combining AR and QR scanning to make the process more interactive and captivating for first-time eco-shoppers. With that, I sketched out possible designs for the AR interface that could appeal to various kinds of eco-shoppers:

It was also at this stage that I started thinking about how to display the carbon emissions, water usage, and lifespan of the clothes. I figured that the best way to help users imagine how drastic an environmental footprint their purchases can leave was to compare the numbers to everyday items that we interact with frequently, which will be shown later on in the prototype.
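To make the comparison idea concrete, here is a minimal sketch of how raw footprint numbers could be turned into everyday equivalents. It is not how the prototype itself was built: the names `Footprint` and `to_everyday_terms` are hypothetical, and the two reference constants are placeholder values chosen for illustration, not measured or sourced data.

```python
from dataclasses import dataclass
from typing import Dict

# Placeholder reference values for illustration only (assumptions, not
# sourced data). Replace them with vetted figures before showing users.
LITERS_PER_SHOWER = 65.0
KG_CO2_PER_KM_DRIVEN = 0.2


@dataclass
class Footprint:
    """Hypothetical footprint record for a single garment."""
    water_liters: float
    co2_kg: float


def to_everyday_terms(fp: Footprint) -> Dict[str, float]:
    """Express a footprint as counts of familiar everyday activities."""
    return {
        "showers": round(fp.water_liters / LITERS_PER_SHOWER, 1),
        "km_driven": round(fp.co2_kg / KG_CO2_PER_KM_DRIVEN, 1),
    }


if __name__ == "__main__":
    # Made-up garment values, used only to demonstrate the conversion.
    print(to_everyday_terms(Footprint(water_liters=650.0, co2_kg=2.0)))
```

The design choice here is to keep the raw numbers in the data and do the translation at display time, so the same garment record could be shown as statistics to seasoned eco-shoppers and as everyday comparisons to newcomers.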

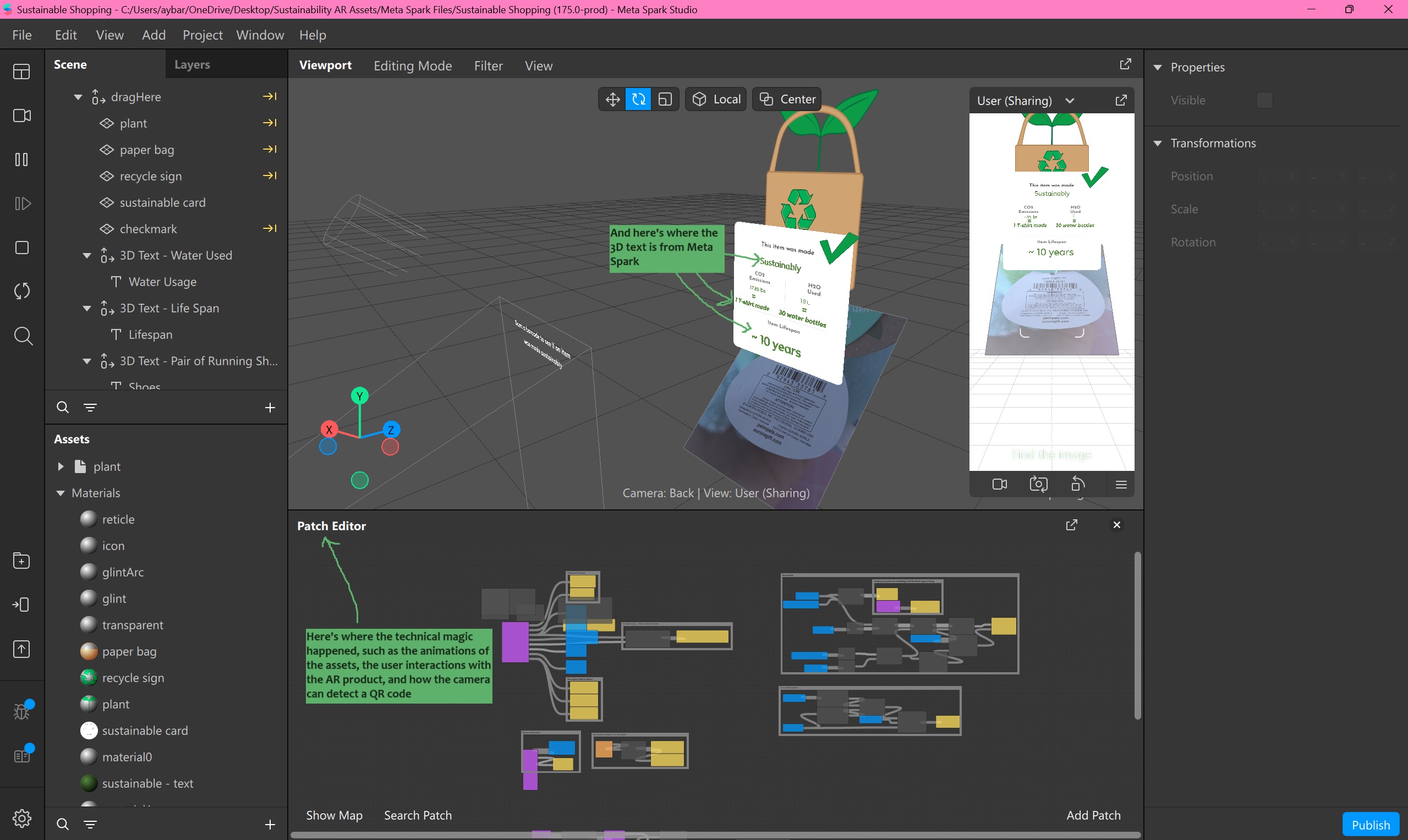

After looking for an AR editor, I found Meta Spark Studio to be the best AR program for the prototype given my limited resources. Initially, it was challenging, but I adapted quickly due to my previous experience with other 3D modeling programs.

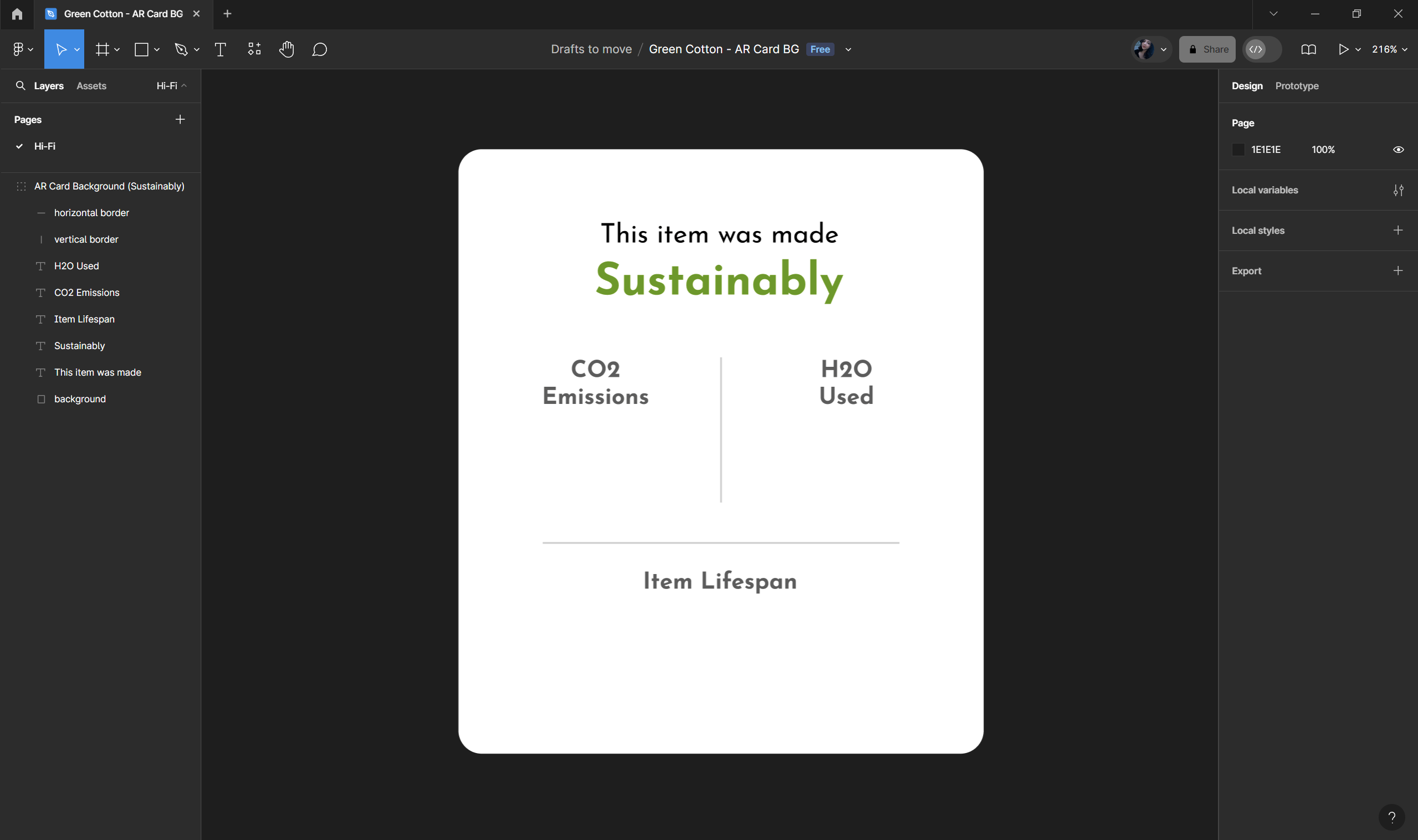

But because Meta Spark Studio offers limited tools for making and editing my own assets, I figured that the best approach was to make them on other editing and prototyping platforms and import them into Meta Spark.

So first, I made the flat card that would be used as the background of the AR effect in Figma:

And lastly, I brought all of the assets into Meta Spark, added the 3D text with its built-in text editor, and added animations for the assets:

I tested the prototype with three users and received mostly positive feedback. However, I observed that all the users struggled a bit with scanning the QR code. Although they eventually managed to scan it without assistance, they mentioned this small issue with the first prototype.

Additionally, they pointed out that the text was too small and they couldn't zoom into the AR model since it was in a fixed position on the QR code.

To address these concerns, I went back into Meta Spark Studio and made adjustments based on their feedback. I resized the QR image to cover the entire tag for easier scanning and increased the size of the text and assets for better readability.

I presented the revised prototype to the same three users, and they all noted that it was easier to scan and more readable from a comfortable distance.

After completing the development of the AR Green Cotton experience, I eagerly submitted the project to an AR experience contest hosted at the Brooklyn Public Library by Jobs For the Future (JFF). I am thrilled to announce that the project was selected as one of the 5 winners out of 25 contestants (and I even won a VR headset!). This recognition reaffirmed the effectiveness and innovation of my design solution in addressing the challenges of sustainable shopping.

Winning the contest not only provided validation for my approach but also opened up opportunities for further development and implementation of the AR scanner in UX design. Additionally, I had the honor of being featured in a JFF blog post titled “AR for All: Learner Perspectives on Augmented Reality Training”. The post advocated for colleges and universities to integrate AR courses and learning into their curriculum, citing my Green Cotton concept as an exemplary case. It's exciting to see my project making waves and inspiring broader conversations about the role of AR in education and sustainability initiatives.

This was my first project as a UX designer working with a medium other than the traditional mobile device or desktop, and it opened my eyes to the potential of new technologies and where UX can be involved in them. I would definitely want to expand into more AR products in the future, as it’s a much more creative way of utilizing Figma and Illustrator. It was also interesting to see how integrated these programs are, and I would love to make use of their versatility in the future.